Mapping Business Needs to Storage Systems

Selecting Appropriate Storage Technologies in GCP

This article examines the considerations data engineers weigh when mapping business requirements to storage systems. By the end of it, you should have a clearer understanding of the business needs that drive the choice of storage technology.

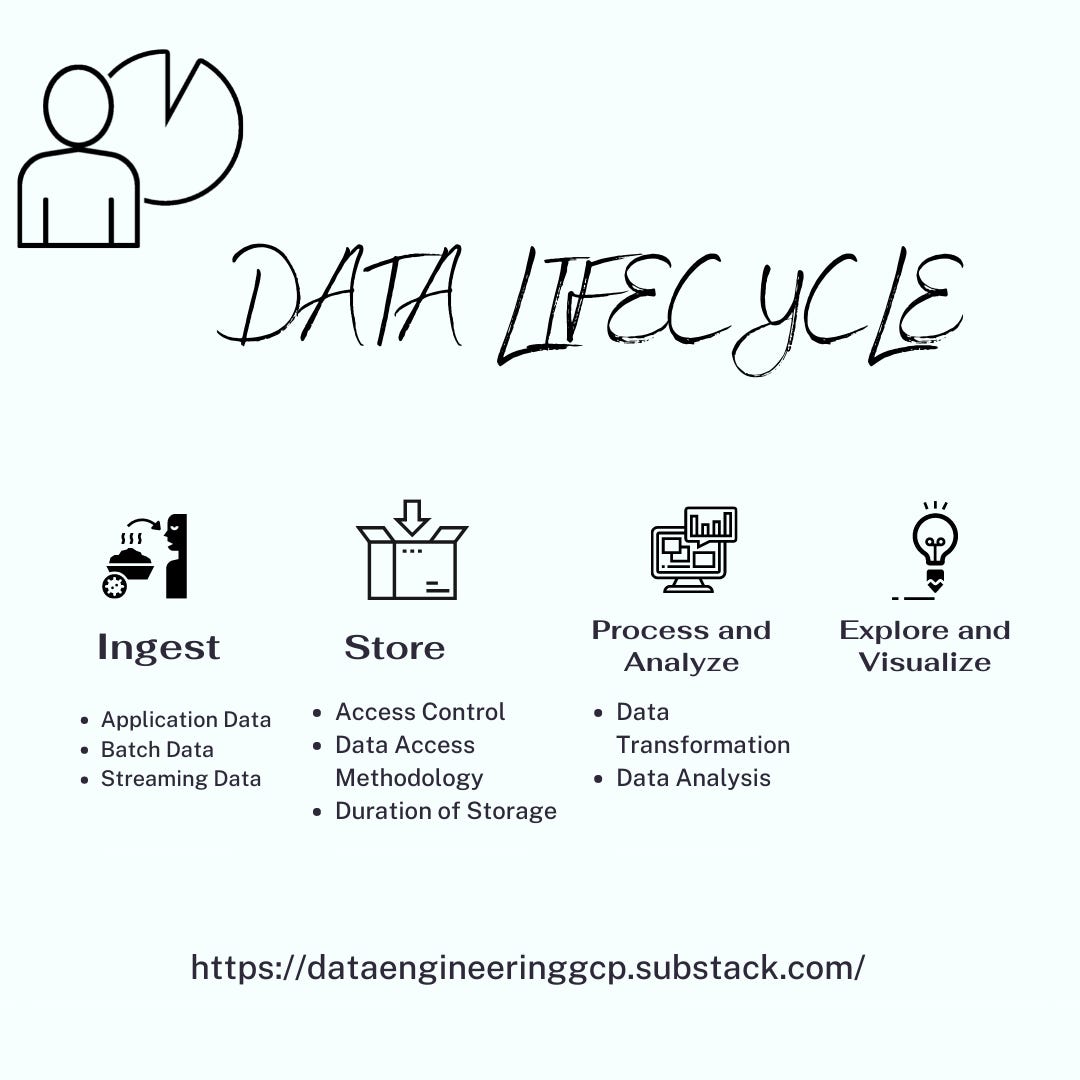

Business needs are the starting point for selecting a data storage solution. Data engineers use different storage systems for different purposes, and the stage of the data lifecycle that a storage system serves strongly influences which system you should select.

The data lifecycle comprises four stages:

Ingest

Store

Process and analyse

Explore and visualise

1. Ingest

Ingestion is the first stage in the data lifecycle: acquiring data and bringing it into GCP.

Most data engineers work with three main modes of ingestion:

Application data

Streaming data

Batch data

Application Data

Application data is generated by applications, including mobile apps, and sent to backend services.

Examples include:

Transactions generated by an online retail application.

Users’ clickstream data when reading articles on a news website.

Log data from a server.

Services running on Compute Engine, Kubernetes Engine, or App Engine can ingest application data. Application data can also be stored in Google Cloud's operations suite (for example, log data in Cloud Logging) or in a GCP-managed database such as Cloud SQL or Cloud Datastore.
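To make the shape of application data concrete, here is a minimal sketch of a backend handler that parses a clickstream event sent by a news-reading app. The `ClickEvent` type and its fields (`user_id`, `article_id`, `ts`) are hypothetical, and the in-memory list is only a stand-in for a managed database such as Cloud SQL or Cloud Datastore; no GCP API is called.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ClickEvent:
    """One clickstream event from a news-reading app (hypothetical schema)."""
    user_id: str
    article_id: str
    ts: datetime


def parse_click_event(payload: str) -> ClickEvent:
    """Parse a JSON payload sent by a client app into a typed record."""
    raw = json.loads(payload)
    return ClickEvent(
        user_id=str(raw["user_id"]),
        article_id=str(raw["article_id"]),
        # The client sends epoch seconds; interpret them as UTC.
        ts=datetime.fromtimestamp(raw["ts"], tz=timezone.utc),
    )


# Hypothetical stand-in for a managed database table.
events: list[ClickEvent] = []


def handle_request(payload: str) -> None:
    """Backend entry point: parse the incoming event and persist it."""
    events.append(parse_click_event(payload))


handle_request('{"user_id": "u42", "article_id": "a7", "ts": 1700000000}')
print(events[0])
```

Parsing into a typed record at the edge means malformed payloads fail early, before they reach storage.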

Streaming Data

Streaming data is sent continuously from a data source as a series of small messages.

Examples include:

An Internet of Things (IoT) device that transmits data every minute.

Monitoring data from virtual machines, such as CPU utilisation and memory consumption.

Stream ingestion services must cope with data that arrives late or not at all. Cloud Pub/Sub is commonly used to ingest streaming data.
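Cloud Pub/Sub delivers the messages; coping with late or missing data is usually handled by the processing layer (Cloud Dataflow, for instance, supports event-time windows with allowed lateness). The sketch below shows the idea in plain Python rather than any GCP API: readings are grouped into one-minute event-time windows, late readings are accepted up to a lateness bound, and empty windows are reported as missing. The window size, the 120-second lateness bound, and the sample messages are illustrative assumptions.

```python
from collections import defaultdict

WINDOW_SECONDS = 60      # assumed: one reading expected per minute
ALLOWED_LATENESS = 120   # assumed: seconds after a window closes that a late event still counts


def window_start(event_time: int) -> int:
    """Align an event timestamp (epoch seconds) to the start of its one-minute window."""
    return event_time - event_time % WINDOW_SECONDS


def assign_to_windows(messages):
    """Group (event_time, arrival_time, value) messages by event-time window.

    An event is kept if it arrives no later than ALLOWED_LATENESS seconds after
    its window closes; anything later is set aside as dropped.
    """
    windows = defaultdict(list)
    dropped = []
    for event_time, arrival_time, value in messages:
        start = window_start(event_time)
        if arrival_time <= start + WINDOW_SECONDS + ALLOWED_LATENESS:
            windows[start].append(value)
        else:
            dropped.append((event_time, value))
    return dict(windows), dropped


def missing_windows(windows, first_start, last_start):
    """Return window start times in [first_start, last_start] that received no data."""
    return [s for s in range(first_start, last_start + 1, WINDOW_SECONDS) if s not in windows]


messages = [
    (0, 1, 20.5),      # on time
    (65, 70, 21.0),    # on time
    (130, 250, 22.0),  # late, but within the allowed lateness
    (10, 400, 19.0),   # too late: its window closed at t=60, lateness ran out at t=180
    (250, 251, 23.0),  # on time
]
windows, dropped = assign_to_windows(messages)
missing = missing_windows(windows, 0, 240)
print(windows)
print(dropped)
print(missing)
```

Here no reading arrives for the window starting at 180 seconds, so `missing` is `[180]`, and the reading that arrives at 400 seconds is dropped rather than silently merged into a closed window.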

Batch Data

Batch data is ingested in bulk, typically as files. For example, uploading files of data exported from one application so that another application can process them is a form of batch ingestion.

Examples include:

A weekly operational report used for production forecasting.

Application log data stored in a relational database and exported for use in a downstream processing pipeline.

Cloud Storage is commonly used for batch uploads. For very large volumes of data, users can also use Storage Transfer Service or Transfer Appliance.
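Whether a "vast" dataset should travel over the network or on a physical appliance largely comes down to arithmetic: data size divided by usable bandwidth. A back-of-the-envelope sketch, assuming decimal terabytes and an illustrative 80% link efficiency (an assumption, not a figure from Google):

```python
SECONDS_PER_DAY = 86_400


def transfer_days(size_terabytes: float, bandwidth_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate days needed to upload data over a network link.

    efficiency is the assumed fraction of nominal bandwidth actually achieved.
    """
    bits = size_terabytes * 1e12 * 8                      # decimal TB -> bits
    seconds = bits / (bandwidth_gbps * 1e9 * efficiency)  # bits / usable bits per second
    return seconds / SECONDS_PER_DAY


for size_tb in (1, 100, 1000):
    print(f"{size_tb:>5} TB over 1 Gbps: {transfer_days(size_tb, 1.0):.1f} days")
```

Under these assumptions, 100 TB over a 1 Gbps link takes roughly 11.6 days, the kind of figure that makes shipping data on an appliance worth considering.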

2. Store

The storage step involves persisting data to a storage system from which we can retrieve it later in the data lifecycle.

Factors to Consider When Choosing a Storage System

How access controls are implemented: at the database level or at a finer grain

How the data is accessed: row-based or column-based

How long the data must be stored
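The row-based versus column-based distinction is about which values are stored together. The toy in-memory sketch below (plain Python, hypothetical order data) contrasts fetching one whole record, the typical transactional pattern, with aggregating one field across all records, the typical analytical pattern that column-oriented stores such as BigQuery are designed for.

```python
# Row-oriented layout: each record's fields are stored together.
rows = [
    {"order_id": 1, "customer": "ada", "amount": 120.0},
    {"order_id": 2, "customer": "bola", "amount": 75.5},
    {"order_id": 3, "customer": "ada", "amount": 30.0},
]

# Column-oriented layout: each field's values are stored together.
columns = {
    "order_id": [1, 2, 3],
    "customer": ["ada", "bola", "ada"],
    "amount": [120.0, 75.5, 30.0],
}


def get_order(order_id: int) -> dict:
    """Row access: return the complete record for one order."""
    return next(r for r in rows if r["order_id"] == order_id)


def total_amount() -> float:
    """Column access: aggregate one field without reading any other field."""
    return sum(columns["amount"])


print(get_order(2))
print(total_amount())
```

In a real columnar store the saving is I/O: a query that sums `amount` reads only that column from disk.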

Access Control

The confidentiality level of the data determines the kind of access control you need to implement.

Cloud SQL and Cloud Spanner can restrict access to individual tables and views. For example, some users may be able to update a table, others may only be able to view it, and some may have no access at all. This is an example of fine-grained access control.
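The fine-grained model can be pictured as a lookup keyed on role and table: privileges are granted per table or view, so one role can update a table while another can only read a view. This is a plain-Python illustration with hypothetical role and table names, not how Cloud SQL or Cloud Spanner are actually configured; those services use their own SQL and IAM mechanisms.

```python
# Hypothetical role -> table/view -> privileges map.
GRANTS = {
    "analyst": {"sales_summary_view": {"SELECT"}},
    "clerk": {"orders": {"SELECT", "UPDATE"}},
}


def can(role: str, action: str, table: str) -> bool:
    """Return True only if the role holds this privilege on this specific table or view."""
    return action in GRANTS.get(role, {}).get(table, set())


print(can("clerk", "UPDATE", "orders"))     # clerk may update orders
print(can("analyst", "SELECT", "orders"))   # analyst sees only the summary view
```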

Users with an admin role can restrict access to Cloud Storage at the level of a bucket and the objects stored in it. If a user can access a file in the bucket, that user can access all of the data in that file; access cannot be limited to part of an object. This is an example of coarse-grained access control.
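By contrast, in the coarse-grained model the access decision is made for a whole bucket or object, and a permitted reader receives every byte of the object. A plain-Python sketch with hypothetical user, bucket, and object names; it models the idea only and is not the Cloud Storage IAM API.

```python
# Hypothetical bucket-level grants: user -> buckets they may read.
BUCKET_READERS = {"alice": {"reports-bucket"}}

# Objects held in each bucket (name -> contents).
OBJECTS = {
    "reports-bucket": {"q1.csv": "region,revenue,salary\nnorth,100,50\n"},
}


def read_object(user: str, bucket: str, name: str) -> str:
    """Return the entire object if the user may read the bucket; otherwise raise."""
    if bucket not in BUCKET_READERS.get(user, set()):
        raise PermissionError(f"{user} cannot read gs://{bucket}")
    # There is no way to hide the salary column: granting the object grants all of it.
    return OBJECTS[bucket][name]


print(read_object("alice", "reports-bucket", "q1.csv"))
```

If only some columns should be visible, the data has to be split into separate objects, or stored in a system that supports finer-grained controls.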

Note: Each storage service provides its own security features and access controls. Be sure to consider all of your access control requirements and how well a given storage system meets them.

Data Access Methodology

Data can be accessed in different ways. Online transaction processing (OLTP) systems, for example, frequently filter on specific criteria to look up particular records.

Cloud SQL and Cloud Datastore provide the query functionality that e-commerce applications need.
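A filter-style lookup of this kind looks like the following in SQL. The sketch uses Python's built-in `sqlite3` module purely as a local stand-in for a relational service such as Cloud SQL; the `orders` table and its columns are hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for a managed relational database.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, status TEXT, amount REAL)"
)
# An index on the filter column keeps point lookups fast as the table grows.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer)")
conn.executemany(
    "INSERT INTO orders (customer, status, amount) VALUES (?, ?, ?)",
    [("ada", "shipped", 120.0), ("bola", "pending", 75.5), ("ada", "pending", 30.0)],
)

# Typical OLTP access: filter on criteria to fetch a few specific rows.
pending_for_ada = conn.execute(
    "SELECT order_id, amount FROM orders WHERE customer = ? AND status = ?",
    ("ada", "pending"),
).fetchall()
print(pending_for_ada)
```

Parameterised queries (the `?` placeholders) are also the standard defence against SQL injection in application code.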

Cloud Storage is well suited to data that is accessed in bulk, such as machine learning models.

Duration of Storage

When selecting a storage system, consider how long the data needs to be kept. Some data is transient, for example data needed only briefly by an application running on a Compute Engine instance.

Data needed for longer periods can be stored in Cloud Storage or BigQuery, depending on the use case.

Data that is accessed frequently is often best suited to either a relational or a NoSQL database.

Also consider your data retention policy when choosing a storage service. For example, Cloud Storage is suitable for archiving ageing data, which can be imported back into a database when needed.
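A retention policy often reduces to age-based rules. The plain-Python sketch below suggests where a dataset should live based on its age; the tier names and the 30-day and 365-day thresholds are hypothetical. Cloud Storage's Object Lifecycle Management can apply age-based rules to objects automatically, but this code does not use it.

```python
from datetime import date

# Hypothetical thresholds, not GCP defaults.
HOT_DAYS = 30
ARCHIVE_DAYS = 365


def storage_tier(created_on: date, today: date) -> str:
    """Suggest a storage tier from the age of the data in days."""
    age = (today - created_on).days
    if age <= HOT_DAYS:
        return "database"        # recent, frequently accessed data
    if age <= ARCHIVE_DAYS:
        return "cloud-storage"   # kept for longer-term use
    return "archive"             # ageing data retained for compliance or occasional reload


today = date(2024, 6, 1)
for created in (date(2024, 5, 20), date(2024, 1, 1), date(2023, 1, 1)):
    print(created, storage_tier(created, today))
```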

3. Process and Analyse

In the process and analyse stage, data is transformed into formats that support ad hoc querying and other kinds of analysis.

Data Transformation

Transformations include data cleansing, the process of identifying and correcting inaccurate data. Some cleansing checks are based on the expected data type; for example, a date field must contain a valid date.
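Type-based cleansing can be as simple as attempting to convert each field to its expected type and recording the failures instead of aborting. A minimal sketch, assuming a hypothetical student record with an `admission_year` (integer) field and an `enrolled_on` (ISO date string) field:

```python
from datetime import date


def cleanse(record: dict) -> tuple[dict, list[str]]:
    """Coerce fields to their expected types, collecting errors instead of failing."""
    clean, errors = {}, []
    try:
        clean["admission_year"] = int(record["admission_year"])
    except (KeyError, TypeError, ValueError):
        errors.append("admission_year is not an integer")
    try:
        clean["enrolled_on"] = date.fromisoformat(record["enrolled_on"])
    except (KeyError, TypeError, ValueError):
        errors.append("enrolled_on is not an ISO date")
    return clean, errors


print(cleanse({"admission_year": "2019", "enrolled_on": "2019-09-02"}))
print(cleanse({"admission_year": "twenty", "enrolled_on": "2019-13-01"}))
```

Collecting errors per record lets a pipeline route bad rows to a review table rather than failing the whole batch.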

Business logic can also be used to detect inaccurate data. Some business rules are straightforward, such as requiring that the graduation year is not earlier than the admission year, or that a transaction date is not earlier than the date the account was opened.
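Rules like these are easy to express as named predicates applied to each record. A minimal sketch; the field names (`admission_year`, `graduation_year`, `account_opened`, `transaction_date`) are hypothetical:

```python
from datetime import date
from typing import Callable

# Each rule: (description, predicate that returns True when the record is valid).
Rule = tuple[str, Callable[[dict], bool]]

STUDENT_RULES: list[Rule] = [
    ("graduation year must not precede admission year",
     lambda r: r["graduation_year"] >= r["admission_year"]),
]

TRANSACTION_RULES: list[Rule] = [
    ("transaction date must not precede account opening date",
     lambda r: r["transaction_date"] >= r["account_opened"]),
]


def violations(record: dict, rules: list[Rule]) -> list[str]:
    """Return the description of every rule the record breaks."""
    return [desc for desc, is_valid in rules if not is_valid(record)]


print(violations({"admission_year": 2018, "graduation_year": 2016}, STUDENT_RULES))
print(violations(
    {"account_opened": date(2022, 3, 1), "transaction_date": date(2022, 2, 14)},
    TRANSACTION_RULES,
))
```

Keeping rules as data rather than scattered `if` statements makes it easy to add, review, and report on them.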

Normalisation and standardisation of data are further examples of transformations. For example, an application may expect phone numbers in Nigeria to include the three-digit country calling code (+234). If a phone number lacks this code, it can be inferred from the associated location.
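A minimal normalisation sketch, assuming the record's location has already been resolved to Nigeria and that the number is a mobile number whose national part is ten digits (written locally with a leading 0, as in 0803 123 4567). Landline formats differ, so the length check is an assumption that would need adjusting for real data. The function name is hypothetical.

```python
import re

COUNTRY_CODE = "234"  # Nigeria's international calling code


def normalise_ng_phone(raw: str) -> str:
    """Normalise a Nigerian mobile number to +234 followed by the 10-digit national number."""
    digits = re.sub(r"\D", "", raw)          # drop spaces, dashes, '+', brackets
    if digits.startswith(COUNTRY_CODE):
        national = digits[len(COUNTRY_CODE):]
    elif digits.startswith("0"):
        national = digits[1:]                # strip the domestic leading 0
    else:
        national = digits
    if len(national) != 10:                  # assumption: mobile numbers only
        raise ValueError(f"unexpected Nigerian mobile number: {raw!r}")
    return f"+{COUNTRY_CODE}{national}"


for raw in ("0803 123 4567", "+234 803 123 4567", "2348031234567"):
    print(normalise_ng_phone(raw))
```

All three inputs normalise to the same string, which is what makes later joins and de-duplication on phone number reliable.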

Cloud Dataflow supports both stream and batch data transformation.

Data Analysis

During the analysis stage, several techniques can be used to extract useful information from data. Statistical methods are frequently applied to numerical data to:

Compute the mean and standard deviation of a dataset

Measure correlations between variables

Cluster a dataset into groups of similar entities

Data engineers analyse text with operations such as extracting entities (names, races, addresses, and specialisations) and grouping records by those entities.
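Once entities have been extracted from text (for example with a natural-language service, which this sketch does not call), grouping by them is a simple aggregation. The records below are hypothetical and assume extraction has already happened.

```python
from collections import defaultdict

# Hypothetical records whose entities were already extracted from free text.
profiles = [
    {"name": "Ngozi Eze", "specialisation": "cardiology", "city": "Lagos"},
    {"name": "Tunde Bello", "specialisation": "oncology", "city": "Abuja"},
    {"name": "Amaka Obi", "specialisation": "cardiology", "city": "Abuja"},
]


def group_by(records, entity):
    """Group record names by the value of one extracted entity."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[entity]].append(rec["name"])
    return dict(groups)


print(group_by(profiles, "specialisation"))
print(group_by(profiles, "city"))
```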

Cloud Dataproc, BigQuery, and Cloud Dataflow are all well suited to data analysis.

4. Explore and Visualise

When working with a new dataset, it often helps to explore the data and test hypotheses about it.
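One lightweight way to test a hypothesis on a new dataset is a permutation test. The sketch below asks whether two groups (hypothetical weekday and weekend page-view counts) differ in mean by more than random relabelling would explain; the sample data, resample count, and seed are illustrative choices, and this is one standard technique rather than a GCP feature.

```python
import random


def mean(xs):
    """Arithmetic mean of a non-empty sequence."""
    return sum(xs) / len(xs)


def permutation_test(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means between samples a and b.

    Returns the fraction of random relabellings whose mean difference is at least
    as large as the observed one (an estimate of the p-value).
    """
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    return extreme / n_resamples


weekday_views = [120, 130, 125, 118, 122]
weekend_views = [150, 160, 155, 158, 149]
p_value = permutation_test(weekday_views, weekend_views)
print(f"estimated p-value: {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be a labelling accident, which is a reasonable signal to investigate further before building dashboards around it.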

You can use Cloud Datalab, a GCP tool for exploring, analysing, and visualising datasets. Datalab integrates with libraries such as TensorFlow, Matplotlib, pandas, and NumPy.

Google Data Studio is well suited to tabular reports and basic charts. Its drag-and-drop interface lets users explore a dataset without writing any code.

Final Thoughts

Ingestion is the first stage in the data lifecycle: gathering data and delivering it to GCP. The storage stage persists data in a storage system so that it can be retrieved later in the lifecycle. In the process and analyse stage, the data is converted into a format suitable for analytical tools. The final stage is exploration and visualisation, in which analysis findings are presented in charts, tables, and other visual forms for others to use.

The data lifecycle provides a foundation for understanding the broader context of data and the fields related to it.