Technical Aspects of Data: Volume, Velocity, Variation, Access, and Security

Selecting Appropriate Storage Technologies In GCP

Aside from the business requirements we highlighted in our previous article when choosing a data storage system. It is essential to evaluate the technical aspect of data and how they influence our choice of data store.

This article evaluates the technical aspect of data that data engineers examine when selecting a data storage system. By the end of this article, you should have a better grasp of the many technical aspects of data that go into choosing the best storage method.

GCP provides numerous data storage services. They are all intended to address some specific scenarios, albeit not all. Therefore, a good understanding of how a particular data storage service on GCP optimises for each technical aspect of data is crucial for performance.

The following are some technical criteria to consider while selecting a storage system:

The Volume of data

The Velocity of data

Variation in schema

Data Access trends

Security Considerations

Volume

Volume refers to the scale of information handled by a data processing system. While some storage services store lesser volumes, others can store massive volumes of data from kilobytes to petabyte scale.

Cloud SQL is often a viable option for applications that require a relational database and only fulfil requests inside a single region. Cloud SQL storage can store up to 64TB of data. The actual limit for SQL Server instances, MySQL instances, and PostgreSQL instances depends on the machine type and whether you are utilising shared or dedicated vCPUs. Shared core capacity is limited to 3 TB, whereas dedicated core capacity is limited to 64 TB.

The maximum size of a single item in Cloud Storage is 5 TB. There is no cap on the number of reads (uploading, updating, and deleting objects) or writes (reading object data, reading object metadata, and listing objects).

Users may store up to 64 TB of data on persistent disks that can be connected to Compute Engine instances. In addition, you can attach up to 127 secondary non-boot zonal persistent disks when creating an instance. The maximum associated capacity for persistent disks per instance is 257 TB.

BigQuery, a managed data warehousing and analytical database, allows for up to 4,000 partitions per table and has no maximum limit on the number of tables in a dataset. If you go beyond this limit, think about employing clustering instead of or in addition to partitioning.

Cloud Bigtable, designed for telemetry data and massive-volume analytical applications, has a maximum storage capacity of 16 TB per node for HDD and 5 TB per node for SSDs. Up to 1,000 tables may be present in one Bigtable instance.

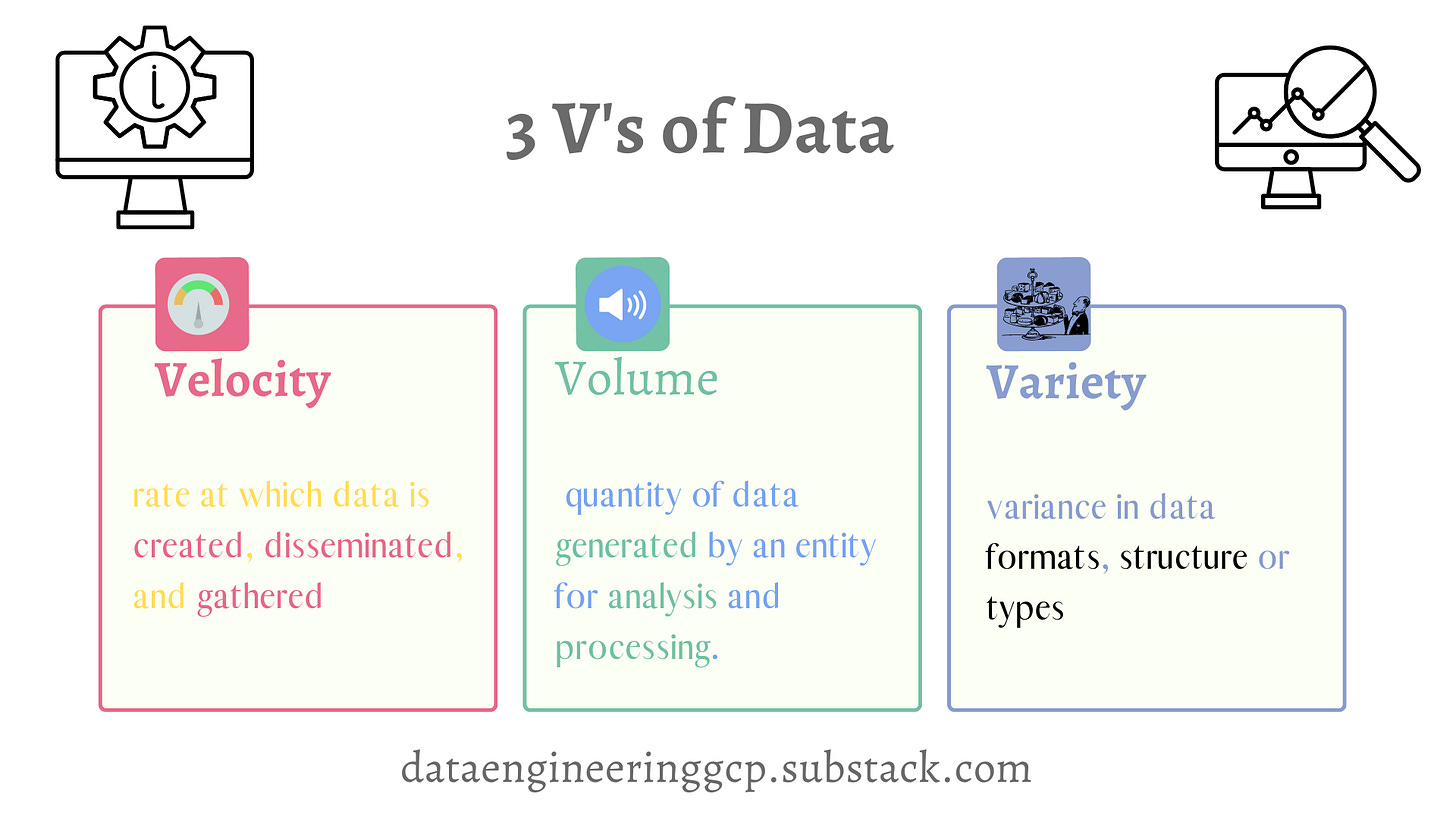

Velocity

Velocity is the speed at which data is processed or used productively, such as ingesting, analysing, and visualising.

We start with processing data in an ad hoc way. Still, we can move up to batched or periodic data processing through near real-time and actual real-time systems. Here are some illustrations of varying rates ranging from low to high velocity.

Weekly upload of data to a data warehouse.

Daily reports on the number of check-ins at a hotel.

Analysis of data from an IoT device in the previous minute.

Real-time alert based on log message as soon as it is generated.

It is crucial to match the velocity of incoming data with the pace at which the data store can write data when it is being ingested and sent to storage. e.g. Bigtable is optimised for real-time velocity data and can write up to 10,000 rows per second using a one-node cluster with SSD or HDD.

If high-velocity data is processed as ingested, it is the correct approach to write the data to a Cloud Pub/Sub topic. The processing application may then access the data conveniently using a pull subscription. Cloud Pub/Sub is an autonomously scalable, managed messaging platform. Users are not required to deploy resources or define scalability settings.

An enterprise may use Transfer Appliance or Storage Transfer Service to upload vast amounts of data to the cloud for low-velocity tasks such as batch and ad-hoc operations.

Variety

Variety is the diversity of data sources, styles, and complexity.

The degree of change you anticipate in the data structure is another vital factor to consider when selecting a storage technology.

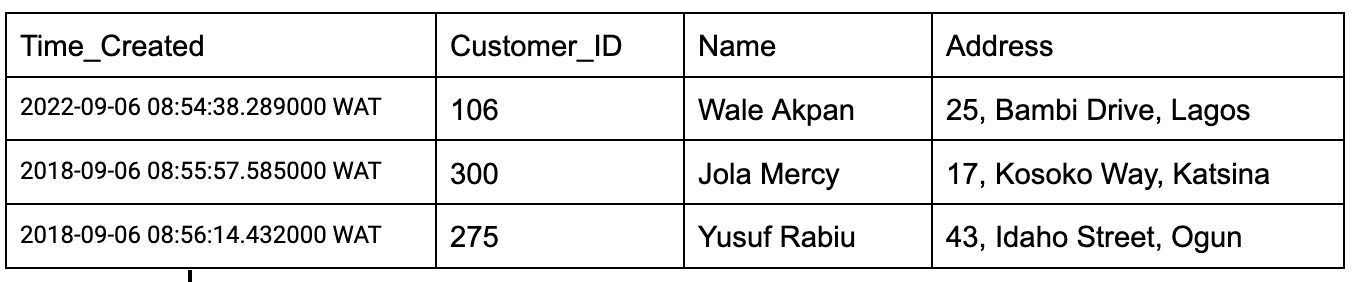

There are data structures with little variability. As an illustration, the data format of customers’ information shows Time_Created, Customer_ID, Name and Address hardly ever changes. Unless there is a problem, such as a failed message flow or invalid data, every data transferred to the storage system will include those four details.

Some scenarios do not fit well into the rigid structure of relational databases. NoSQL databases, such as MongoDB, DynamoDB, Google Cloud Firestore and CosmosDB, are examples of document databases. These databases reflect many diverse attributes using sets of key-value pairs.

Example of structured, relational data.

In a document database, we may define the data in the second row using a structure similar to the following:

{

'Time_Created': '2018-09-06 08:55:57.585000 WAT', 'Customer_ID': '300', 'Name': 'Jola Mercy', 'Address': '17, Kosoko Way, Katsina',

}It is not required to utilise a document database structure for the example above because most entries in a table showing customer details will have the same characteristics.

Think about a company that conducts several business deals. Below is an illustration of how a withdrawal and a subscription transaction may be defined:

{

{

'id': '345',

'type': 'withdrawal',

'source': 'application',

'amount': '4000',

'narration': 'null'

}

{

'id':'6756',

"type":

{ 'Main_type': 'Subscription' 'sub_type': 'Dstv'

}, 'iuc_number': '6784765789', 'source': 'website',

'amount': '2000',

'narration': 'ddhfh'

}

}Data Access

Data is accessed in many ways depending on the scenario. For example, analysts may view data from sensors as soon as they are available; however, they are unlikely to be accessed beyond a week. At the same time, data that has been backup may be viewed fewer than once per year.

The following are some criteria to keep in mind regarding data access patterns;

How frequently is data ingested and accessed?

How much data is written during ingestion to storage?

How much data is accessed during a read operation?

Different read and write operations apply to little and large volumes of data. One example is reading or writing telemetry data. Depending on the use case, writing data from a transaction system may also involve a little or large volume of data. These will necessitate different types of databases. For example, telemetry data may be better suited to Bigtable owing to its low-latency writes. In contrast, transaction data is an excellent use case for Cloud SQL since it enables more I/O operations to satisfy relational databases.

Cloud Storage offers large-scale data ingestion solutions like Cloud Transfer Service and Transfer Appliance.

Enormous amounts of data are also typically read in BigQuery. However, we regularly read a few columns over a high number of rows. BigQuery works best for such types of reads by using a Capacitor. Capacitors, a columnar storage structure, are built to store semi-structured data with nested and repeating fields.

Users can now determine the appropriate storage technology for a specific scenario by emphasising essential aspects required to support data access patterns.

Security Consideration

Access restrictions will vary depending on the storage system. For instance, access restriction for cloud storage is coarse-grained. i.e. all the data contained in a file in Cloud Storage is accessible to everyone who has access to that file.

When specific individuals are allowed only a part of a dataset, database administrators may store the data in a relational database. Hence, users can access a view that contains just the data needed.

By default, all Google Cloud storage services enable encryption at rest which is crucial for data privacy compliance. Therefore, restricting access to data is essential when selecting a storage service.

Final Thoughts

While the technical characteristics of data are not exhaustive, this article provides a fundamental grasp of what is essential when selecting a storage system.

Most storage systems are optimised for different scenarios; you may have to embrace a tradeoff of high read for low write or throughput for latency.

Now that you are familiar with some of the technical aspects of data. You can apply your skills to determine the storage technologies that are currently best suited for your application and workload.